A VPC endpoint enables you to create a private connection between your VPC and another AWS service without requiring access over the Internet. An endpoint enables instances in your VPC to use their private IP addresses to communicate with resources in other services. Your instances do not require public IP addresses, and you do not need an Internet gateway, a NAT device, or a virtual private gateway in your VPC. You can use endpoint policies to control access to resources in other services. Traffic between your VPC and the AWS service does not leave the Amazon network.

In short, we use a VPC endpoint to reach AWS services in the same region using private IP addresses only.

There are two types of VPC Endpoint:

- VPC Gateway Endpoint

- VPC Interface Endpoint

The main difference is where the endpoint plugs in. A gateway endpoint is a target in your route tables: the VPC router sends traffic destined for the service's prefix list (shown as pl-xxxxxxxx) to the endpoint. An interface endpoint, by contrast, places an elastic network interface with a private IP address directly in your subnets (this is powered by AWS PrivateLink). Additionally, gateway endpoints support only two services: 1) DynamoDB and 2) S3. Most other services are covered by interface endpoints.
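The gateway endpoints for S3 and DynamoDB are selected by a service name of the form com.amazonaws.<region>.<service>, which is what the console lists when you create the endpoint. Below is a minimal Python sketch of that naming pattern and of the "only S3 and DynamoDB" rule; the helper names are hypothetical and exist only for illustration.

```python
# Gateway endpoints exist for exactly two services.
GATEWAY_SERVICES = {"s3", "dynamodb"}


def endpoint_service_name(region: str, service: str) -> str:
    """Build a service name such as com.amazonaws.us-east-1.s3 (hypothetical helper)."""
    return f"com.amazonaws.{region}.{service}"


def supports_gateway_endpoint(service: str) -> bool:
    """True only for the two services that offer gateway endpoints."""
    return service.lower() in GATEWAY_SERVICES


for svc in ("s3", "dynamodb", "sqs"):
    kind = "gateway endpoint available" if supports_gateway_endpoint(svc) else "use an interface endpoint"
    print(endpoint_service_name("us-east-1", svc), "->", kind)
```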

Advantages:

- We use a gateway endpoint mainly to cut costs, because a NAT gateway is comparatively expensive. When I checked AWS pricing in June 2019, a NAT gateway cost $0.045 per hour plus a per-GB data processing charge. The VPC endpoint price of $0.01 per endpoint per AZ per hour (plus per-GB data processing) applies to interface endpoints (AWS PrivateLink); gateway endpoints for S3 and DynamoDB carry no additional charge.
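To make the difference concrete, here is a rough monthly estimate in Python. The hourly rates are the June 2019 figures quoted above; the per-GB rates, the 730-hour month, the 100 GB of traffic, and the two-AZ interface setup are assumptions for illustration only, so check current AWS pricing before relying on any number.

```python
# Rough monthly cost comparison for S3 traffic from private subnets.
# ASSUMPTIONS: per-GB rates, hours per month, and traffic volume are illustrative.
HOURS_PER_MONTH = 730  # assumption: average number of hours in a month


def monthly_cost(hourly_rate, per_gb_rate, gb_processed, count=1):
    """Hourly charge for `count` resources plus a per-GB processing charge."""
    return count * hourly_rate * HOURS_PER_MONTH + per_gb_rate * gb_processed


gb = 100  # hypothetical: 100 GB per month between the DB server and S3

nat_gateway = monthly_cost(0.045, 0.045, gb)                # one NAT gateway
interface_endpoint = monthly_cost(0.01, 0.01, gb, count=2)  # one per AZ, two AZs
gateway_endpoint = monthly_cost(0.0, 0.0, gb)               # no additional charge

for name, cost in [("NAT gateway", nat_gateway),
                   ("Interface endpoint", interface_endpoint),
                   ("Gateway endpoint", gateway_endpoint)]:
    print(f"{name:<20} ${cost:,.2f}")
```

The hourly component dominates at low traffic volumes, which is why the NAT gateway stays expensive even when little data flows through it.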

- Because traffic stays on the Amazon network within the region instead of crossing the Internet, the setup is more secure.

- Traffic does not pass through a NAT device or the public Internet, which can mean lower latency and less congestion.

This makes gateway endpoints a good option for companies that work in a single region.

Disadvantages:

- A gateway endpoint only reaches buckets in the same region as the VPC; we cannot access buckets in other regions through it.

To work with a gateway endpoint, we first need a functional VPC, meaning at least its Internet gateway (IGW), subnets, and route tables are set up and associated properly. In this practical, I use a functional VPC named 'webshack-vpc'. No NAT configuration is needed.

STEPS FOR CREATING VPC GATEWAY ENDPOINT:

- Create a VPC named 'webshack-vpc'

- Launch one instance in the public subnet and another in the private subnet

- Log in to the public instance named 'linux-jump-webserver'

- Verify whether the AWS CLI is installed

- Create a role with the AmazonS3FullAccess policy

- Attach this role to the public instance

- Copy the key from your laptop to the instance in the public subnet

- Log in to the DB server using the copied key

- Attach the same role to the DB server

- Create the endpoint

- Verify from PuTTY by listing buckets

- (Optional) Access using PuTTY Agent (Pageant)

Step 1: Create a VPC named 'webshack-vpc':

Follow the below-mentioned link to create a VPC:

Step 2: Launch one instance in the public subnet and another in the private subnet:

First, we launch an instance in the public subnet, which acts as our web server:

AWS ➔ Services ➔ EC2 ➔ Instances ➔ Launch Instance ➔ [*] Free tier only ➔ Select 'Amazon Linux' AMI ➔ Instance Type: t2.micro ➔ Next ➔ Number of instances: 1 ➔ Network: select the 'webshack-vpc' VPC we created ➔ Subnet: select subnet-1 (us-east-1a), the public subnet we use for the web server ➔ Auto-assign Public IP: Enable (already set, because we enabled it when we created the public subnet) ➔ Next ➔ Next: Add Tags ➔ Name: linux-jump-webserver ➔ Next: Configure Security Group ➔ [*] Create a new security group ➔ Security group name: linux-access ➔ Description: linux-access is created to access linux instances ➔ Rule: SSH: TCP: 22: Anywhere ➔ Review and Launch ➔ Launch ➔ Create a new key pair OR select an existing key pair ➔ Launch Instances ➔ View Instances.

Next, we launch an instance in the private subnet, which acts as our database server:

AWS ➔ Services ➔ EC2 ➔ Instances ➔ Launch Instance ➔ [*] Free tier only ➔ Select 'Amazon Linux' AMI ➔ Instance Type: t2.micro ➔ Next ➔ Number of instances: 1 ➔ Network: select the 'webshack-vpc' VPC we created ➔ Subnet: select subnet-2 (us-east-1b), the private subnet we use for the DB server ➔ Auto-assign Public IP: Disable (the default, because we did not enable it when we created the private subnet) ➔ Next ➔ Next: Add Tags ➔ Name: Linux-db-server-pvt ➔ Next: Configure Security Group ➔ [*] Create a new security group ➔ Security group name: linux-access (security group names must be unique within a VPC, so if this name is already taken, choose a different name or select the existing group instead) ➔ Description: linux-access is created to access linux instances ➔ Rule: SSH: TCP: 22: Anywhere (we allow Anywhere here for simplicity, but in production restrict the source to a CIDR range, such as the VPC's range, so that this private instance can only be reached privately) ➔ Review and Launch ➔ Launch ➔ Create a new key pair OR select an existing key pair (using the same key pair as the web server keeps the later steps simple) ➔ Launch Instances ➔ View Instances.
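To see what restricting the SSH rule to a CIDR range changes, here is a small sketch using Python's ipaddress module. The rule dictionary follows the shape of an EC2 IpPermissions entry; the VPC CIDR 192.168.0.0/16 is a hypothetical value chosen because the example DB server IP used later in this post (192.168.0.10) falls inside it, and the helper names are illustrative only.

```python
import ipaddress

# Hypothetical CIDR for 'webshack-vpc'; the jump server connects from its
# private IP, which lies inside this range.
VPC_CIDR = "192.168.0.0/16"


def ssh_rule(source_cidr: str) -> dict:
    """Ingress rule shaped like an EC2 IpPermissions entry (TCP port 22)."""
    return {
        "IpProtocol": "tcp",
        "FromPort": 22,
        "ToPort": 22,
        "IpRanges": [{"CidrIp": source_cidr}],
    }


def rule_allows(rule: dict, source_ip: str, port: int = 22) -> bool:
    """Check whether a TCP connection from source_ip to port matches the rule."""
    if rule["IpProtocol"] != "tcp" or not rule["FromPort"] <= port <= rule["ToPort"]:
        return False
    ip = ipaddress.ip_address(source_ip)
    return any(ip in ipaddress.ip_network(r["CidrIp"]) for r in rule["IpRanges"])


anywhere = ssh_rule("0.0.0.0/0")  # the IPv4 part of the console's "Anywhere"
vpc_only = ssh_rule(VPC_CIDR)     # restricted to addresses inside the VPC

print(rule_allows(anywhere, "203.0.113.25"))  # a public address matches
print(rule_allows(vpc_only, "203.0.113.25"))  # outside the VPC: no match
print(rule_allows(vpc_only, "192.168.0.10"))  # inside the VPC: match
```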

Step 3: Log in to the public instance named 'linux-jump-webserver':

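Run PuTTY ➔ Session: paste the public IP of the web server instance from the EC2 Dashboard ➔ Expand SSH ➔ Select Auth ➔ Browse ➔ select the private key (.ppk file; PuTTY cannot read the .pem file directly, so convert it with PuTTYgen first) ➔ Open ➔ Login as: ec2-user (the default user on Amazon Linux).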

Step 4: Verify whether the AWS CLI is installed:

Because this is an Amazon Linux AMI, the AWS CLI comes preinstalled; on a Red Hat AMI we need to install it manually. We can confirm it is installed by locating the aws binary with the which command:

$ which aws

/usr/bin/aws

If the command prints the path to the binary, the AWS CLI is installed on that instance.
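The same check can be scripted. Below is a minimal Python sketch that uses the standard library's shutil.which, which searches PATH the way the which command does; the helper name cli_path is hypothetical.

```python
import shutil


def cli_path(name: str = "aws"):
    """Return the full path of an executable found on PATH, or None (like `which`)."""
    return shutil.which(name)


path = cli_path("aws")
if path:
    print(f"AWS CLI found at {path}")
else:
    print("AWS CLI not found; install it before continuing")
```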

However, if we try to list our buckets, the CLI returns a credentials error, because we have not given it an access key and secret access key (and no IAM role is attached yet):

$ aws s3 ls

Unable to locate credentials. You can configure credentials by running "aws configure".
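Why does this fail, and why will attaching a role fix it? The AWS CLI checks several credential sources in order and gives up only when all of them are empty. Below is a deliberately simplified Python model of three of those sources, in the order the CLI checks them: environment variables, the shared credentials file, and the IAM role attached to the instance. The real chain has more steps, and the function and its instance_role parameter are hypothetical stand-ins, not the CLI's actual code.

```python
import os
from pathlib import Path


def credential_source(env=None, home=None, instance_role=None):
    """Simplified model of where the AWS CLI looks for credentials.

    Only three sources are modelled; the real chain has more steps
    (command-line options, SSO, container credentials, and so on).
    """
    env = os.environ if env is None else env
    home = Path.home() if home is None else Path(home)
    if env.get("AWS_ACCESS_KEY_ID") and env.get("AWS_SECRET_ACCESS_KEY"):
        return "environment variables"
    if (home / ".aws" / "credentials").is_file():
        return "shared credentials file"
    if instance_role:  # hypothetical stand-in for the instance metadata lookup
        return f"instance profile role {instance_role}"
    return None  # the CLI reports "Unable to locate credentials"


# Fresh instance: no keys, no ~/.aws/credentials, no role attached yet.
print(credential_source(env={}, home="/nonexistent-home"))
# Once the role is attached, the last source in the chain succeeds.
print(credential_source(env={}, home="/nonexistent-home",
                        instance_role="webshack-ec2-to-s3-full"))
```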

Step 5: Create a role with the AmazonS3FullAccess policy:

We create an IAM role so that we do not have to store an access key and secret access key on the instance. To do this, navigate to IAM:

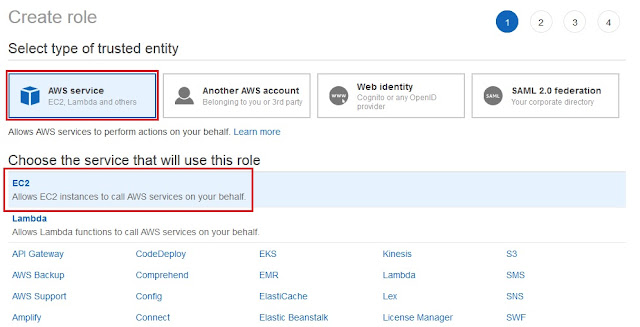

AWS ➔ Services ➔ IAM ➔ Roles ➔ Create role ➔ Select AWS service (selected by default) ➔ Select EC2 ➔ Next: Permissions ➔ In the search bar, search for 's3fullaccess' ➔ [*] AmazonS3FullAccess ➔ Next: Tags (optionally add a tag) ➔ Next: Review ➔ Role name: webshack-ec2-to-s3-full ➔ Provide a proper description ➔ Create role.
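Behind the console clicks, the role consists of two pieces: a trust policy saying who may assume the role (here, the EC2 service) and the permissions policy we attached (AmazonS3FullAccess). The sketch below writes out a trust policy equivalent to what choosing "AWS service ➔ EC2" produces, as a Python dictionary; printing it as JSON is only for illustration.

```python
import json

# Trust policy that lets the EC2 service assume the role.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# AWS managed permissions policy attached to the role in this step.
S3_FULL_ACCESS_ARN = "arn:aws:iam::aws:policy/AmazonS3FullAccess"

print(json.dumps(trust_policy, indent=2))
print("Permissions policy:", S3_FULL_ACCESS_ARN)
```

When you create an EC2 role in the console, it also creates an instance profile with the same name; that instance profile is what actually gets attached to the instance in the next step.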

Step 6: Attach this role to the public instance:

Navigate to EC2: AWS ➔ Services ➔ EC2 ➔ Instances ➔ Select the 'linux-jump-webserver' instance ➔ Actions ➔ Instance Settings ➔ Attach/Replace IAM Role ➔ IAM role: select 'webshack-ec2-to-s3-full' ➔ Apply ➔ Close.

Now, if we list our buckets again, the command succeeds:

$ aws s3 ls

2019-05-26 21:23:20 mybucket-1-mumbai

2019-05-26 21:21:33 mybucket-1-nv

2019-05-26 21:22:41 mybucket-1-ohio

2019-05-26 21:22:15 mybucket-2-nv

Step 7: Copy the key from your laptop to the instance in the public subnet:

Now it's time to access the DB server. To do so, we first log in to our jump server (bastion host) and connect to the DB server from there. Even though we connect from the jump server, the DB server's private key must be available on the jump server. In our case, the jump server is our web server. So we either copy the key to the jump server or use Pageant (PuTTY's SSH authentication agent).

First, we do this practical by copying the key to the jump server (bastion host).

Locate the keys folder on your laptop ➔ select the .pem file of the key ➔ open it in Notepad ➔ copy the content ➔ go to the web server instance, create a file with any editor, paste the content into it, and save it. In my case, I create the file in the home folder of ec2-user, but you can create the key file in any location.

$ pwd

/home/ec2-user

$ vi new-linux-key

<paste the content and save the file>

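Now restrict the file's permissions so that only the owner can read it, because SSH refuses to use a private key that other users can access: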

$ chmod 400 new-linux-key

$ ls -l new-linux-key

-r-------- 1 ec2-user ec2-user 1666 May 26 23:08 new-linux-key
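The rule SSH applies is simple: when you own the key file, no permission bit may be set for group or others. The Python sketch below expresses that check with the standard stat module and shows how modes 400 and 600 appear in ls -l style (400 is owner read-only, -r--------; 600 also keeps owner write, -rw-------; both are accepted). The helper name key_mode_ok is hypothetical.

```python
import stat

# OpenSSH rejects a private key you own if any group/other bit is set.
GROUP_OTHER_BITS = 0o077


def key_mode_ok(mode: int) -> bool:
    """True if only the owner has any access to the key file."""
    return (mode & GROUP_OTHER_BITS) == 0


for perms in (0o400, 0o600, 0o644):
    listing = stat.filemode(stat.S_IFREG | perms)  # same format as `ls -l`
    print(f"{perms:o} {listing} ok={key_mode_ok(perms)}")
```

On the instance itself, you could pass `os.stat("new-linux-key").st_mode & 0o777` to key_mode_ok to check the real file.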

Step 8: Log in to the DB server using the copied key:

We cannot log in to the DB server directly from our laptop, because it sits in a private subnet and has no public IP address. Instead, we SSH to it from the jump server, using its private IP address and the copied key. Syntax:

$ ssh -i <key_name> ec2-user@<Private IP OR hostname of DB Server>

Example:

$ ssh -i new-linux-key ec2-user@192.168.0.10

We are now logged in to the DB server.

Because this instance also uses the Amazon Linux AMI, the AWS CLI is installed by default. However, this DB server has neither Internet access nor an IAM role attached, so listing our buckets fails:

$ aws s3 ls

Unable to locate credentials. You can configure credentials by running "aws configure".

This is simply asking for credentials.

Step 9: Attach the same role to DB server:

AWS ➔ EC2 ➔ Instances ➔ Select DB instance ➔ Actions ➔ Instance Settings ➔ Attach/Replace IAM Role ➔ IAM role: webshack-ec2-to-s3-full ➔ Apply ➔ Close.

Step 10: Create Endpoint:

AWS ➔ Services ➔ VPC ➔ Endpoints ➔ Create Endpoint ➔ Service category: AWS services ➔ Service Name: select com.amazonaws.us-east-1.s3 (Type: Gateway) ➔ VPC: select 'webshack-vpc' ➔ select both route tables (also select the public route table, so that S3 traffic from the web server uses the endpoint too) ➔ Policy: Full Access, or a custom policy of your own ➔ Create endpoint.
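If you choose a custom policy instead of Full Access, the endpoint policy is a JSON document that must name a Principal. Below is a sketch, built as a Python dictionary, that allows reading only the mybucket-1-nv bucket through the endpoint while still permitting s3:ListAllMyBuckets so that aws s3 ls keeps working. The choice of bucket and actions is an illustrative assumption, not the setup used in this practical.

```python
import json

BUCKET = "mybucket-1-nv"  # the N. Virginia bucket from this post

# Illustrative endpoint policy: read one bucket, list bucket names.
endpoint_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ReadOneBucket",
            "Effect": "Allow",
            "Principal": "*",
            "Action": ["s3:ListBucket", "s3:GetObject"],
            "Resource": [f"arn:aws:s3:::{BUCKET}", f"arn:aws:s3:::{BUCKET}/*"],
        },
        {
            "Sid": "ListAllBuckets",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:ListAllMyBuckets",
            "Resource": "*",
        },
    ],
}

print(json.dumps(endpoint_policy, indent=2))
```

The printed JSON can be pasted into the console's custom policy box, or saved as a file and passed to `aws ec2 create-vpc-endpoint --vpc-endpoint-type Gateway --vpc-id <vpc-id> --service-name com.amazonaws.us-east-1.s3 --route-table-ids <rtb-id> <rtb-id> --policy-document file://endpoint-policy.json`.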

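To verify that the endpoint is attached, open Route Tables and check that each selected route table now has a route whose destination is a prefix list (pl-xxxxxxxx) and whose target is the endpoint (vpce-xxxxxxxx).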
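The same check can be automated against the output of `aws ec2 describe-route-tables --route-table-ids <rtb-id>`. Below is a sketch in Python; the route table is a hypothetical sample shaped like one entry of that command's RouteTables list, and all IDs in it are made up.

```python
def endpoint_routes(route_table: dict) -> list:
    """Return routes that send a prefix list (pl-...) to a gateway endpoint (vpce-...)."""
    return [
        route
        for route in route_table.get("Routes", [])
        if route.get("DestinationPrefixListId", "").startswith("pl-")
        and route.get("GatewayId", "").startswith("vpce-")
    ]


# Hypothetical route table; every ID below is invented for illustration.
private_rt = {
    "RouteTableId": "rtb-0123456789abcdef0",
    "Routes": [
        {"DestinationCidrBlock": "192.168.0.0/16", "GatewayId": "local"},
        {"DestinationPrefixListId": "pl-00000000",
         "GatewayId": "vpce-0abc1234def567890"},
    ],
}

for route in endpoint_routes(private_rt):
    print(route["DestinationPrefixListId"], "->", route["GatewayId"])
```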

Step 11: Verify from PuTTY by listing buckets:

Go back to PuTTY ➔ make sure you are connected to the DB server over SSH. If we ping google.com, it does not work, because the DB server has no Internet access. We can still list our buckets:

$ ping www.google.com

(no response, because we still have no Internet connection)

$ aws s3 ls

2019-05-26 21:23:20 mybucket-1-mumbai

2019-05-26 21:21:33 mybucket-1-nv

2019-05-26 21:22:41 mybucket-1-ohio

2019-05-26 21:22:15 mybucket-2-nv

The output lists buckets from every region, but we can only access the contents of buckets in N. Virginia (us-east-1), the region of our VPC and endpoint. To list the contents of a bucket, use:

$ aws s3 ls s3://mybucket-1-nv

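This lists all objects in the bucket. If we run the same command against a bucket in another region, such as mybucket-1-mumbai, it hangs until it times out, because requests for that bucket must go to another region's S3 endpoint and the DB server has no route to the Internet.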
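The rule "only same-region buckets are reachable" can be sketched in Python using the listing from this step. The bucket-to-region mapping below is an assumption inferred from the bucket names (mumbai, nv, ohio); in practice you would look each one up, for example with `aws s3api get-bucket-location --bucket <name>`. The helper names are hypothetical.

```python
# Output of `aws s3 ls` from this step, one "date time name" line per bucket.
LS_OUTPUT = """\
2019-05-26 21:23:20 mybucket-1-mumbai
2019-05-26 21:21:33 mybucket-1-nv
2019-05-26 21:22:41 mybucket-1-ohio
2019-05-26 21:22:15 mybucket-2-nv
"""

# ASSUMPTION: regions inferred from the bucket names, not looked up.
BUCKET_REGIONS = {
    "mybucket-1-mumbai": "ap-south-1",
    "mybucket-1-nv": "us-east-1",
    "mybucket-1-ohio": "us-east-2",
    "mybucket-2-nv": "us-east-1",
}


def bucket_names(ls_output: str) -> list:
    """Extract bucket names (third column) from `aws s3 ls` output."""
    return [line.split(maxsplit=2)[2] for line in ls_output.splitlines() if line.strip()]


def reachable_via_gateway(buckets, vpc_region: str) -> list:
    """A gateway endpoint only reaches buckets in the VPC's own region."""
    return [b for b in buckets if BUCKET_REGIONS.get(b) == vpc_region]


print(reachable_via_gateway(bucket_names(LS_OUTPUT), "us-east-1"))
```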

Step 12: (Optional) Access using PuTTY Agent (Pageant):

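We use Pageant so that we do not have to copy the private key to the jump server. With agent forwarding enabled, the jump server passes authentication requests back to Pageant on our laptop during the same session, so the key itself never leaves the laptop. In this setup, agent forwarding works only when both instances are Linux. If the web server is Windows and the DB server is Linux, you must copy the key to the web server instance.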

This step is optional: it achieves the same result using PuTTY Agent (Pageant).

Close the PuTTY session ➔ Open Pageant (it starts minimized to the system tray, at the right side of the Windows taskbar) ➔ Right-click its icon ➔ View Keys (or double-click the icon) ➔ Add Key ➔ select the .ppk file of the private key ➔ Close ➔ Open PuTTY ➔ Session: enter the public IP of linux-jump-webserver ➔ Expand SSH ➔ Click Auth ➔ [*] Allow agent forwarding ➔ Browse: select the private key ➔ Open.

Username: ec2-user

Delete the key file new-linux-key that we pasted into the home directory of ec2-user, because Pageant now supplies the key:

$ rm -f new-linux-key

Now SSH to DB server:

Syntax:

$ ssh ec2-user@<private-IP of DB server>

Example:

$ ssh ec2-user@192.168.0.10

We are now logged in to the DB server. This works as long as we stay in the same PuTTY session.

SPECIAL NOTE:

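Do not run the ssh command as root (for example after sudo) when using Pageant. Agent forwarding belongs to the ec2-user session that was opened with Pageant: with a default sudo configuration, sudo resets the environment and drops the SSH_AUTH_SOCK variable that points to the forwarded agent, so ssh running as root cannot find the key and the login fails with a permission denied error. For Linux-to-Linux connections, we can forward keys the same way by using ssh-agent.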
